AI Chip Market Size, Share & Industry Trends, 2032

AI Chip Market by Offering (GPU, CPU, FPGA, NPU, TPU, Trainium, Inferentia, T-head, Athena ASIC, MTIA, LPU, Memory {DRAM (HBM, DDR)}, Network {NIC/Network Adapters, Interconnects}), Function (Training, Inference), & Region - Global Forecast to 2032

OVERVIEW

Source: Secondary Research, Interviews with Experts, MarketsandMarkets Analysis

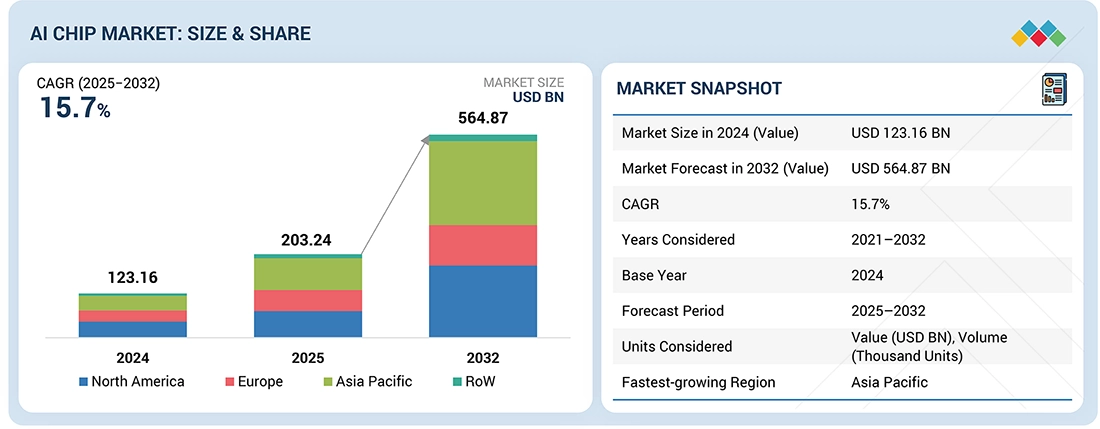

The AI chip market is projected to grow from USD 203.24 billion in 2025 to USD 564.87 billion by 2032, at a CAGR of 15.7% from 2025 to 2032. This growth is driven by the pressing need for large-scale data handling and real-time analytics.
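The headline figures can be cross-checked with the standard compound annual growth rate formula, CAGR = (end value / start value)^(1 / years) - 1. The minimal Python sketch below plugs in the report's 2025 and 2032 values (seven compounding years); the helper names `cagr` and `project` are illustrative, not part of any library.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding `start_value` at `rate` for `years` years."""
    return start_value * (1 + rate) ** years


# Report figures, in USD billion: 2025 base and 2032 forecast (7 years apart).
rate = cagr(203.24, 564.87, 2032 - 2025)
print(f"Implied CAGR: {rate:.1%}")

# Round-trip check: compounding the 2025 value at the implied rate recovers 2032.
print(f"2032 check: USD {project(203.24, rate, 7):.2f} billion")
```

The implied rate rounds to 15.7%, consistent with the stated CAGR.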

KEY TAKEAWAYS

-

By RegionNorth America is estimated to account for a share of 36.4% of the global AI chip market in 2025.

-

By OfferingBy offering, the network segment is expected to register the highest CAGR of 26.7%.

-

By ComputeBy compute, the CPU segment is projected to grow at the fastest rate from 2025 to 2032.

-

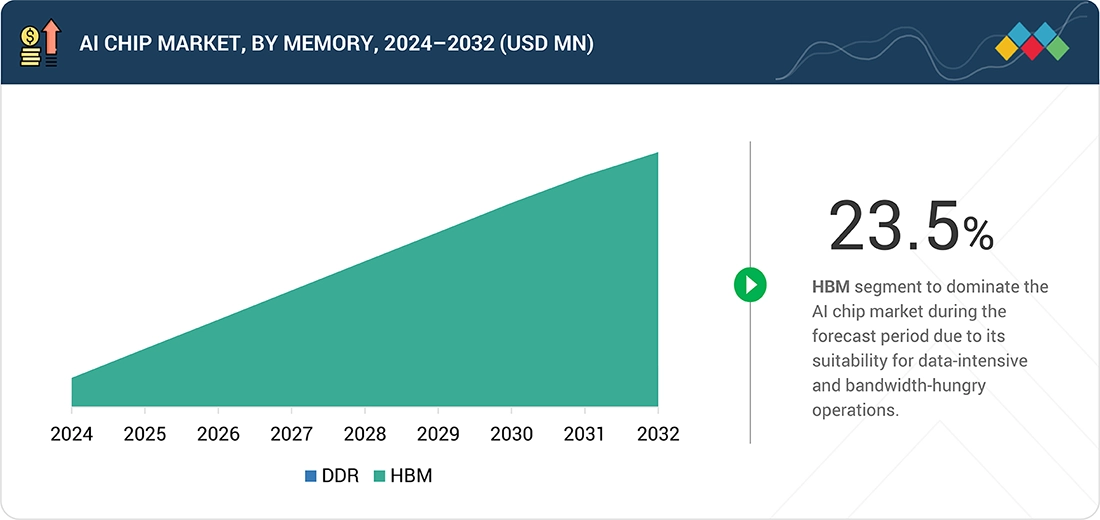

By MemoryBy memory, the HBM segment is expected to dominate the market.

-

By NetworkBy network, the NIC/network adapters segment is expected to record the fastestgrowth rate during the forecast period.

-

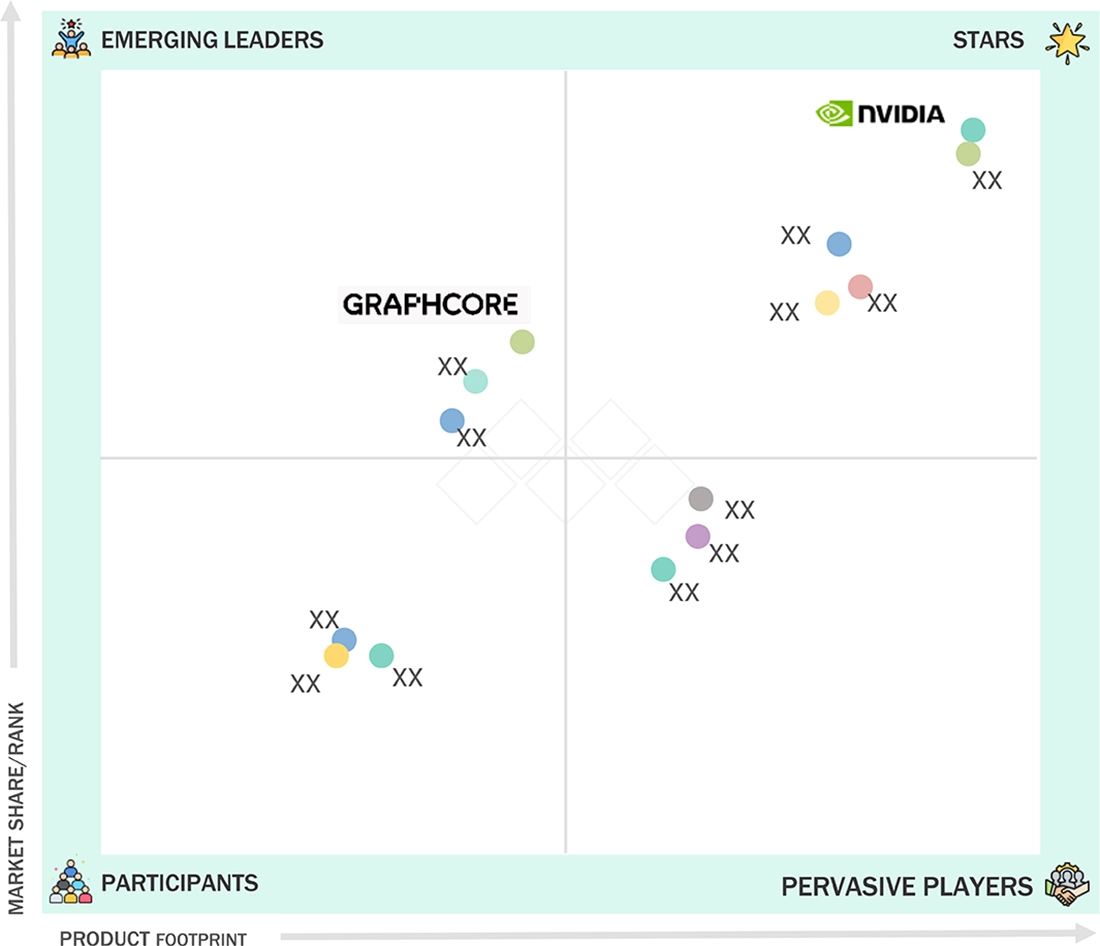

Competitive Landscape - Key PlayersNVIDIA Corporation; Advanced Micro Devices, Inc; Intel Corporation; and Micron Technology, Inc. were identified as star players in the AI chip market (global), given their strong market share and product footprint.

-

Competitive Landscape - StartupsCompanies such as Mythic; Kalray; Blaize; Groq, Inc.; HAILO TECHNOLOGIES LTD; GreenWaves Technologies; SiMa Technologies, Inc; among others have distinguished themselves as key startups and SMEs by securing strong footholds in specialized niche areas, underscoring their potential as emerging market leaders

The AI chip market is experiencing rapid expansion, fueled by soaring demand for high-performance GPUs, accelerators, and specialized processors that power large-scale training, inference, and edge intelligence workloads. Advancements such as tensor cores, chiplets, optical interconnects, and energy-efficient AI compute are accelerating adoption across the cloud, enterprise, automotive, and industrial sectors. Product launches and ecosystem developments, including strategic partnerships between hyperscalers and semiconductor leaders, multi-billion-dollar supply agreements, and co-developed AI accelerator platforms, are reshaping competitive dynamics. At the same time, massive investments in advanced packaging, HBM capacity, and next-generation foundry technologies are further propelling innovation and strengthening the market’s long-term growth trajectory.

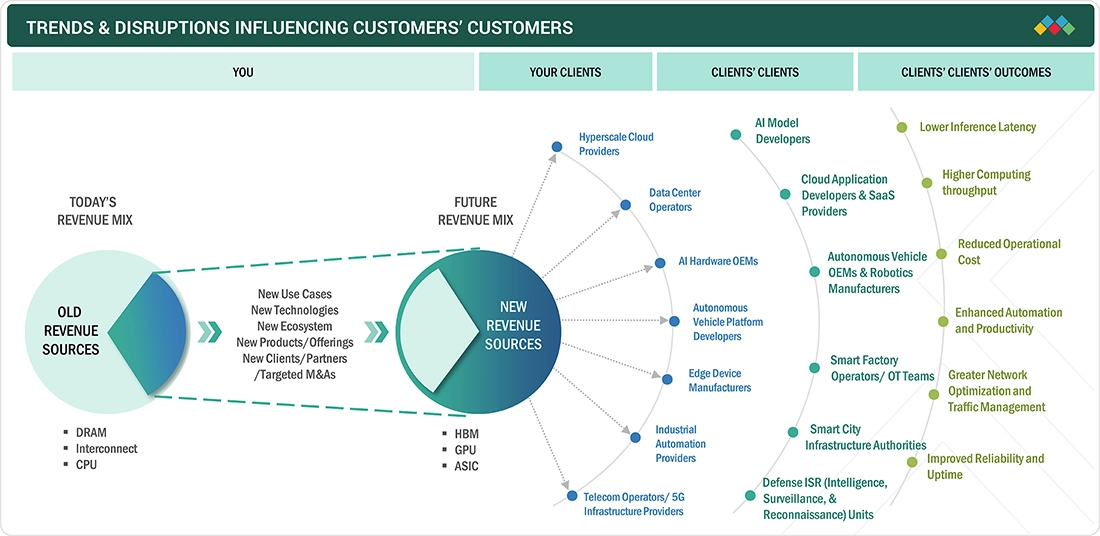

TRENDS & DISRUPTIONS IMPACTING CUSTOMERS' CUSTOMERS

The AI chip market is reshaping value creation across the technology ecosystem, extending far beyond direct semiconductor buyers. As cloud providers, data center operators, OEMs, and infrastructure vendors integrate advanced AI processors into their platforms, their downstream stakeholders, ranging from AI developers and industrial operators to healthcare, automotive, and telecom teams, gain access to significantly enhanced computational capabilities. This multi-tier impact ultimately delivers measurable outcomes such as faster inference, improved automation, reduced operating costs, and new intelligent services that accelerate digital transformation across enterprise and consumer markets.


MARKET DYNAMICS

Drivers

- Surging use of GPUs and ASICs in AI servers

- Pressing need for large-scale data handling and real-time analytics

Restraints

- Computational workloads and power consumption in AI chips

- Shortage of skilled workforce with technical know-how

Opportunities

- Increasing investments in AI-enabled data centers by cloud service providers

- Government initiatives to deploy AI-enabled defense systems

Challenges

- Supply chain disruptions

- Data privacy concerns associated with AI platforms


Driver: Surging use of GPUs and ASICs in AI servers

There is a spike in demand for AI chips with the rising deployment of AI servers in diversified AI-powered applications across several industries, including BFSI, healthcare, retail & e-commerce, media & entertainment, and automotive. Data center owners and cloud service providers are upgrading their infrastructure to enable AI applications. The rising inclination toward using chatbots, Artificial Intelligence of Things (AIoT), predictive analytics, and natural language processing drives the need for AI servers to support these applications. These applications require powerful hardware platforms to perform complex computations and process large data volumes.

Restraint: Computational workloads and power consumption in AI chips

Data centers and other infrastructure supporting AI workloads rely on GPUs and ASICs with parallel processing features. Parallelism across thousands of cores makes these chips well suited to complex AI workloads such as deep learning training and large-scale simulations, but it also results in high power consumption, which raises energy costs for data centers and organizations deploying AI infrastructure. As AI models grow more complex and data volumes increase, the power demands of AI chips continue to rise. Excessive power consumption also generates heat that can only be managed with more advanced cooling systems, adding to the complexity and cost of infrastructure.
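To make the cost argument concrete, the sketch below estimates the annual electricity use and cost of a hypothetical accelerator cluster. Every input (device count, per-device power, utilization, power usage effectiveness or PUE, and electricity price) is an illustrative assumption, not a figure from this report; PUE stands in for the cooling and facility overhead described above.

```python
from dataclasses import dataclass

HOURS_PER_YEAR = 24 * 365


@dataclass
class Cluster:
    # All values are hypothetical inputs for illustration only.
    devices: int             # number of GPUs/ASICs
    watts_per_device: float  # average board power draw under load, in watts
    utilization: float       # fraction of the year the devices run at that draw
    pue: float               # power usage effectiveness (facility power / IT power)


def annual_energy_mwh(c: Cluster) -> float:
    """Facility-level energy per year in MWh, including cooling overhead via PUE."""
    it_kw = c.devices * c.watts_per_device / 1000
    return it_kw * c.utilization * HOURS_PER_YEAR * c.pue / 1000


def annual_cost_usd(c: Cluster, usd_per_kwh: float) -> float:
    """Electricity cost per year at a flat (assumed) price per kWh."""
    return annual_energy_mwh(c) * 1000 * usd_per_kwh


cluster = Cluster(devices=1024, watts_per_device=700, utilization=0.8, pue=1.4)
print(f"{annual_energy_mwh(cluster):,.0f} MWh/yr, "
      f"USD {annual_cost_usd(cluster, usd_per_kwh=0.10):,.0f}/yr")
```

Because PUE multiplies the whole result, better cooling that lowers PUE reduces facility energy proportionally, which is why cooling efficiency features so prominently in AI infrastructure costs.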

Opportunity: Increasing investments in AI-enabled data centers by cloud service providers

Cloud service providers (CSPs) are making massive investments in scaling and upgrading data center infrastructure to support accelerating demand for AI-based applications and services. Most of these investments aim to improve scalability and operational efficiency. As CSPs expand their cloud services, demand for AI chips is likely to increase, creating growth opportunities for AI chip providers. For instance, AWS (US) announced an investment of USD 5.30 billion to build cloud data centers in Saudi Arabia. Similarly, in November 2023, Microsoft (US) announced plans to build several new data centers in Quebec, expanding its footprint across Canada, and to invest USD 500 million over the next two years in its cloud computing and AI infrastructure in Quebec. Such infrastructure requires state-of-the-art AI chips, including GPUs, TPUs, and other AI accelerators, to meet the ever-increasing computational requirements of AI training and inference.

Challenge: Supply chain disruptions

Supply chain disruption is one of the major challenges faced by players in the AI chip market, affecting production volumes, delivery times, and, ultimately, the cost of processors. Component shortages, caused by insufficient semiconductor materials or limited production capacity, create significant production delays. Delays may also stem from equipment breakdowns or the complexity of manufacturing cutting-edge AI chips. At the same time, demand for high-performance GPUs capable of faster large language model (LLM) training and real-time inference keeps rising, which strains constrained supply and can further lengthen time to market. Thus, supply chain disruptions significantly impact the entire AI chip market.

AI CHIP MARKET: COMMERCIAL USE CASES ACROSS INDUSTRIES

| COMPANY | USE CASE DESCRIPTION | BENEFITS |
|---|---|---|
| | OVH SAS integrated 4th Gen AMD EPYC processors into its Bare Metal server lineup to boost performance and reliability for demanding AI inference workloads. | The deployment delivered a 15–20% performance uplift, higher resilience, and improved core density, enabling OVH SAS to provide more cost-efficient, high-performance cloud solutions. |
| | Tencent adopted 3rd Gen Intel Xeon Scalable Processors with advanced acceleration features to power its Xiaowei intelligent speech and video service platform, enabling high-quality neural TTS processing. | The Intel-optimized solution enhanced speech synthesis performance, delivering faster, more efficient TTS capabilities for enterprises and intelligent device vendors. |
| | AIC deployed AMD EPYC-powered custom servers to build Western Digital’s high-density SSD test and validation chamber, enabling faster and more flexible drive testing in a compact environment. | The solution enhanced batch processing speeds, improved overall QA efficiency, and ensured rigorous SSD reliability validation to protect customer data. |

Logos and trademarks shown above are the property of their respective owners. Their use here is for informational and illustrative purposes only.

MARKET ECOSYSTEM

The AI chip ecosystem consists of chip designers (Analog Devices, Texas Instruments, SK HYNIX, Intel, Samsung), semiconductor manufacturers and equipment suppliers (TSMC, Intel, ASML, Lam Research), chip providers (NVIDIA, AMD, Google), and end users (Siemens, Google, AWS, Microsoft). Designers create advanced processor architectures that manufacturing partners fabricate using leading-edge semiconductor equipment. Chip providers deliver high-performance AI accelerators that power cloud, enterprise, and edge applications. End users drive demand for faster compute, energy efficiency, and scalable AI workloads, while close collaboration across the value chain enables continuous innovation and market expansion.


MARKET SEGMENTS


AI Chip Market, by Compute

The GPU segment is expected to hold the largest market share throughout the forecast period. GPUs can efficiently handle the huge computational loads required to train and run deep learning models, which rely heavily on large matrix multiplications. This makes them vital in data centers and AI research, where the rapid growth of AI applications requires efficient hardware. Major manufacturers such as NVIDIA Corporation (US), Intel Corporation (US), and Advanced Micro Devices, Inc. (US) continually develop and release new GPUs that enhance AI capabilities both in data centers and at the edge. For example, in November 2023, NVIDIA Corporation released an upgraded HGX H200 platform based on the Hopper architecture, featuring the H200 Tensor Core GPU. The H200, the first GPU with HBM3e memory, provides 141 GB of memory at 4.8 terabytes per second of bandwidth.
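The matrix-multiplication point can be quantified. Multiplying an M×K matrix by a K×N matrix takes about 2·M·N·K floating-point operations (one multiply and one add per term), and the ratio of those operations to bytes moved (arithmetic intensity) indicates whether a chip is compute-bound or memory-bound. The back-of-the-envelope sketch below assumes 16-bit (2-byte) operands that are each transferred exactly once, and reuses the H200 figures quoted above (141 GB at 4.8 TB/s) to estimate one full pass over memory; it is a simplified model, not a benchmark, and the function names are illustrative.

```python
def matmul_flops(m: int, n: int, k: int) -> int:
    """Operation count for an (m x k) @ (k x n) product: one multiply + one add per term."""
    return 2 * m * n * k


def matmul_bytes(m: int, n: int, k: int, bytes_per_elem: int = 2) -> int:
    """Bytes moved if A, B, and C are each transferred once (idealized, no re-reads)."""
    return bytes_per_elem * (m * k + k * n + m * n)


def arithmetic_intensity(m: int, n: int, k: int, bytes_per_elem: int = 2) -> float:
    """FLOPs performed per byte of memory traffic."""
    return matmul_flops(m, n, k) / matmul_bytes(m, n, k, bytes_per_elem)


def full_memory_sweep_seconds(capacity_gb: float, bandwidth_tb_s: float) -> float:
    """Time to read the whole memory once at peak bandwidth (decimal GB and TB)."""
    return (capacity_gb * 1e9) / (bandwidth_tb_s * 1e12)


size = 4096
print(f"FLOPs: {matmul_flops(size, size, size):.3e}")
print(f"Intensity: {arithmetic_intensity(size, size, size):.1f} FLOP/byte")
print(f"H200 full-memory sweep: {full_memory_sweep_seconds(141, 4.8) * 1e3:.1f} ms")
```

For a square 4096 matrix product, the intensity works out to 4096/3, roughly 1,365 FLOP/byte: far more arithmetic than memory traffic, which is the regime where thousands of parallel GPU cores pay off.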

AI Chip Market, by Function

The inference function accounted for the largest market share and is estimated to register the highest CAGR during the forecast period. Inference uses pre-trained AI models to make accurate predictions or timely decisions based on new data. As businesses integrate AI to improve production efficiency, enhance customer experience, and drive innovation, the need for robust inference capabilities in data centers is growing. Data centers are rapidly scaling up their AI capabilities, which makes efficiency and performance in inference processing increasingly important. A critical factor fostering the growth of the AI chip market is the rising requirement for more energy-efficient, higher-performance inference chips.
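The distinction between training and inference can be illustrated with a toy model: training produces the weights once, and inference reuses those fixed weights to score each new input. In the minimal sketch below, `WEIGHTS` and `BIAS` are hypothetical stand-ins for the output of a prior training run, and the model is a simple logistic regression rather than a deep network. The point is only that inference is a repeated, read-only forward pass, which is why per-query efficiency matters at data center scale.

```python
import math
from typing import Sequence

# Hypothetical "pre-trained" parameters; in practice these come from a training job.
WEIGHTS = (0.8, -1.2, 0.5)
BIAS = -0.1


def predict_proba(features: Sequence[float]) -> float:
    """Forward pass only: weighted sum of inputs followed by a sigmoid."""
    if len(features) != len(WEIGHTS):
        raise ValueError("feature count must match the trained model")
    z = BIAS + sum(w * x for w, x in zip(WEIGHTS, features))
    return 1.0 / (1.0 + math.exp(-z))


def infer_batch(batch: Sequence[Sequence[float]], threshold: float = 0.5) -> list[bool]:
    """Score new, unseen inputs with the fixed weights; no parameters are updated."""
    return [predict_proba(x) >= threshold for x in batch]


new_data = [(1.0, 0.2, 0.4), (0.1, 1.5, 0.0)]
print(infer_batch(new_data))
```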

AI Chip Market, by Technology

Generative AI technology is likely to dominate the AI chip market throughout the forecast period, driven by an exponential increase in demand for AI models that can generate high-quality content, including text, images, and code. As GenAI models become more complex, data center service providers require AI chips with greater processing capability and memory bandwidth. GenAI is also being adopted at a significantly high rate by enterprises across industries, including retail & e-commerce, BFSI, healthcare, and media & entertainment, in dynamic applications such as NLP, content generation, and automated design generation and process automation. The rising demand for GenAI solutions across these industries is expected to fuel AI chip market growth in the coming years.
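A rough calculation shows why memory capacity and bandwidth matter for GenAI. Storing a model's weights takes roughly parameters × bytes per parameter, and when a large language model generates text one token at a time for a single request, each token requires reading essentially all of those weights, so memory bandwidth caps the token rate. The sketch below assumes a hypothetical 70-billion-parameter model on an accelerator with the 4.8 TB/s bandwidth cited earlier for the H200; it ignores the KV cache, batching, and multi-chip parallelism, so the result is an upper-bound illustration, not a performance figure.

```python
def weight_memory_gb(params: float, bytes_per_param: float) -> float:
    """Approximate memory for model weights alone, in decimal GB."""
    return params * bytes_per_param / 1e9


def max_decode_tokens_per_s(params: float, bytes_per_param: float,
                            bandwidth_tb_s: float) -> float:
    """Bandwidth-bound ceiling for single-request decoding: one full weight read per token."""
    bytes_per_token = params * bytes_per_param
    return bandwidth_tb_s * 1e12 / bytes_per_token


PARAMS = 70e9  # hypothetical model size
for label, width in (("FP16", 2.0), ("INT8", 1.0)):
    print(f"{label}: {weight_memory_gb(PARAMS, width):.0f} GB of weights, "
          f"<= {max_decode_tokens_per_s(PARAMS, width, 4.8):.0f} tokens/s")
```

Halving the bytes per parameter (for example, with 8-bit quantization) halves the weight footprint and roughly doubles this ceiling, one reason chip vendors emphasize both HBM capacity and lower-precision formats.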

REGION

Asia Pacific to be fastest-growing region in global AI chip market during forecast period

The AI chip market in Asia Pacific is poised to grow at the highest CAGR during the forecast period. The escalating adoption of AI technologies in countries such as China, South Korea, India, and Japan will stimulate market growth. AI research and development (R&D) activities receive significant funding from regional government entities, fostering a favorable environment for AI developments. Additionally, the presence of high-bandwidth memory (HBM) tech giants, such as Samsung (South Korea), Micron Technology Inc. (US), and SK HYNIX (South Korea), which have dedicated HBM manufacturing facilities in South Korea, Taiwan, and China, will further boost the AI chip market growth in Asia Pacific in the next few years.

AI CHIP MARKET: COMPANY EVALUATION MATRIX

In the AI chip market matrix, NVIDIA (Star) leads with a dominant market share and a broad, mature product portfolio spanning data center GPUs, AI accelerators, and integrated software ecosystems that power training and inference at scale. Graphcore (Emerging Leader) is gaining strong industry attention with its innovative Intelligence Processing Units (IPUs) and purpose-built architectures for high-efficiency AI computation, positioning itself as a differentiated challenger in specialized workloads. While NVIDIA maintains its leadership through scale, ecosystem depth, and continuous architectural advancements, Graphcore demonstrates clear potential to advance toward the leaders’ quadrant as demand for alternative, energy-efficient AI architectures accelerates across global markets.


KEY MARKET PLAYERS

- NVIDIA Corporation (US)

- Advanced Micro Devices, Inc. (US)

- Intel Corporation (US)

- Micron Technology, Inc. (US)

- Google (US)

- SK HYNIX INC. (South Korea)

- Qualcomm Technologies, Inc. (US)

- SAMSUNG (South Korea)

- Huawei Technologies Co., Ltd. (China)

- Apple Inc. (US)

- Imagination Technologies (UK)

- Graphcore (UK)

- Cerebras (US)

- Groq, Inc. (US)

MARKET SCOPE

| REPORT METRIC | DETAILS |
|---|---|
| Market Size in 2024 (Value) | USD 123.16 Billion |
| Market Forecast in 2032 (Value) | USD 564.87 Billion |
| Growth Rate | CAGR of 15.7% from 2025-2032 |
| Years Considered | 2021-2032 |
| Base Year | 2024 |
| Forecast Period | 2025-2032 |
| Units Considered | Value (USD Billion), Volume (Kiloton) |
| Report Coverage | Revenue Forecast, Company Ranking, Competitive Landscape, Growth Factors, and Trends |
| Segments Covered | |
| Regions Covered | North America, Asia Pacific, Europe, South America, Middle East, Africa |

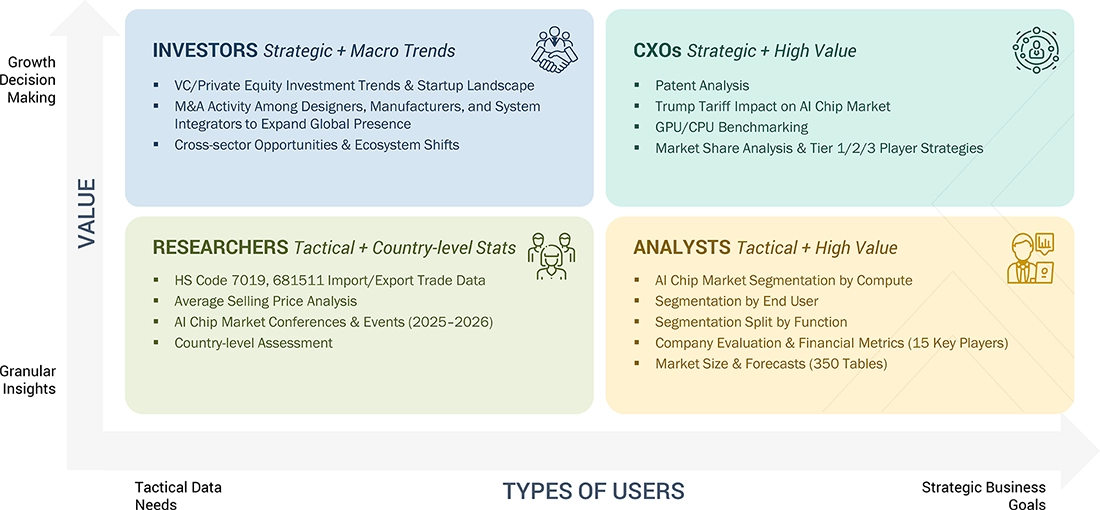

WHAT IS IN IT FOR YOU: AI CHIP MARKET REPORT CONTENT GUIDE

DELIVERED CUSTOMIZATIONS

We have successfully delivered the following deep-dive customizations:

| CLIENT REQUEST | CUSTOMIZATION DELIVERED | VALUE ADDS |
|---|---|---|
| Hyperscale Cloud Provider | | |
| AI Hardware OEM (Servers/Workstations) | | |
| Semiconductor Designer (CPU/GPU/Accelerators) | | |
| Data Center Operator/Colocation Provider | | |
| AI Model Developers/Cloud AI Teams | | |

RECENT DEVELOPMENTS

- January 2025: NVIDIA introduced its next-generation Blackwell Ultra GPUs for hyperscale data centers, delivering major improvements in training throughput for large language models. Several cloud providers, including AWS and Google Cloud, announced early integration plans into their AI compute clusters.

- November 2024: Intel launched the Xeon 6 platform and updated Gaudi 3 AI accelerators, targeting cost-efficient generative AI training and inference. The company secured partnerships with Dell and Lenovo to integrate the platform into new enterprise AI servers.

- October 2024: AMD unveiled the Instinct MI325X accelerator, offering expanded memory and improved efficiency for transformer-based workloads. Microsoft and Meta announced deployments to support scaling of next-gen AI models.

- August 2024: TSMC confirmed volume production of its 2 nm process node, enabling advanced AI chips for customers such as Apple and NVIDIA. The node promises significantly lower power consumption and higher transistor density for high-performance AI compute.

- June 2024: Google introduced TPU v5p, optimized for large-scale training of multimodal AI systems. The TPU is deployed within Google Cloud’s AI Hypercomputer architecture, offering enhanced interconnect speeds and higher model parallelism.

- April 2024: Graphcore expanded its IPU-based compute systems through a partnership with Fujitsu, integrating Graphcore AI platforms into Fujitsu’s enterprise AI infrastructure for inference-intensive workloads.

- February 2024: Samsung announced mass production of HBM3E high-bandwidth memory, aimed at next-generation AI accelerators. NVIDIA and AMD were among the first customers to adopt the new memory standard to enhance AI training performance.


Methodology

The research process for this study involved the systematic gathering, recording, and analysis of data on customers and companies operating in the AI chip market. This process involved the extensive use of secondary sources, directories, and databases (Factiva and Oanda) to identify and collect valuable information for a comprehensive, technical, market-oriented, and commercial study of the AI chip market. In-depth interviews were conducted with primary respondents, including experts from core and related industries, as well as preferred manufacturers, to obtain and verify critical qualitative and quantitative information, and to assess growth prospects. Key players in the AI chip market were identified through secondary research, and their market rankings were determined through a combination of primary and secondary research. This research involved studying the annual reports of top players and conducting interviews with key industry experts, including CEOs, directors, and marketing executives.

Secondary Research

During the secondary research process, various sources were utilized to identify and collect information relevant to this study. These include annual reports, press releases, and investor presentations from companies, as well as white papers, technology journals, certified publications, articles by recognized authors, directories, and databases.

Secondary research was primarily used to gather key information about the industry's value chain, the total pool of market players, the classification of the market according to industry trends, and regional markets, as well as key developments from both market- and technology-oriented perspectives.

Primary Research

Primary research was also conducted to identify the segmentation types, key players, competitive landscape, and key market dynamics, including drivers, restraints, opportunities, challenges, and industry trends, as well as the key strategies adopted by players operating in the AI chip market. Extensive qualitative and quantitative analyses were performed on the complete market engineering process to list key information and insights throughout the report.

Extensive primary research was conducted after acquiring knowledge of the AI chip market scenario through secondary research. Several primary interviews were conducted with experts from both the demand side (end use and region) and the supply side (offering, technology, and function) across four major geographic regions: North America, Europe, Asia Pacific, and RoW. Approximately 80% of the primary interviews were conducted with supply-side respondents and 20% with demand-side respondents. This primary data was collected through questionnaires, emails, and telephonic interviews.

Note: Other designations include technology heads, media analysts, sales managers, marketing managers, and product managers.

The three tiers of companies are based on their total revenues as of 2024: Tier 1: >USD 1 billion; Tier 2: USD 500 million–1 billion; and Tier 3: <USD 500 million.
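The tiering rule can be expressed as a small function. The boundary handling is an assumption, since the text does not say which tier a company with exactly USD 500 million or USD 1 billion in revenue falls into; this sketch treats Tier 1 as strictly above USD 1 billion and Tier 3 as strictly below USD 500 million. The function name `company_tier` is illustrative.

```python
def company_tier(revenue_usd_millions: float) -> int:
    """Map 2024 total revenue (USD million) to the study's tier.

    Assumed boundaries: Tier 1 > 1,000; Tier 2 from 500 to 1,000 inclusive; Tier 3 < 500.
    """
    if revenue_usd_millions < 0:
        raise ValueError("revenue cannot be negative")
    if revenue_usd_millions > 1000:
        return 1
    if revenue_usd_millions >= 500:
        return 2
    return 3


for revenue in (25_000, 750, 120):
    print(revenue, "->", f"Tier {company_tier(revenue)}")
```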


Market Size Estimation

Throughout the comprehensive market engineering process, both top-down and bottom-up approaches were employed, along with several data triangulation methods, to estimate and validate the size of the AI chip market and its various dependent submarkets. Key players in the market were identified through secondary research, and their market share in the respective regions was determined through a combination of primary and secondary research. This entire research methodology involved studying the annual and financial reports of the top players, as well as conducting interviews with experts (including CEOs, VPs, directors, and marketing executives) to gather key insights (both quantitative and qualitative).

All percentage shares, splits, and breakdowns were determined using secondary sources and verified through primary sources. All the possible parameters that affect the markets covered in this research study were accounted for, viewed in detail, verified through primary research, and analyzed to obtain the final quantitative and qualitative data. This data was consolidated and supplemented with detailed inputs and analysis from MarketsandMarkets and presented in this report.

Bottom-Up Approach

- Initially, the companies offering AI chips were identified. Their products were categorized based on compute, memory, network, technology, function, and end user.

- After understanding the different types of AI chips offered by various manufacturers, the market was categorized into segments based on the data gathered through primary and secondary sources.

- To derive the global AI chip market, global chip shipments of top players for AI servers considered in the report's scope were tracked.

- A suitable penetration rate was assigned for compute, memory, and network offerings to derive the shipments of AI chips.

- We derived the AI chip market based on different offerings using the average selling price (ASP) at which a particular company offers its devices. The ASP of each offering was identified based on secondary sources and validated through primary sources.

- For the CAGR, a market trend analysis was conducted by examining the industry penetration rate, as well as the demand and supply of AI chips for various end users.

- The AI chip market is also tracked through the data sanity method. The revenues of key providers were analyzed through annual reports and press releases and summed to derive the overall market.

- For each company, a percentage is assigned to its overall revenue or, in a few cases, segmental revenue to derive its revenue for the AI chips. This percentage for each company is assigned based on its product portfolio and the range of AI chip offerings it provides.

- The estimates at every level were verified and cross-checked through discussions with key opinion leaders, including CXOs, directors, and operations managers, and subsequently validated by domain experts at MarketsandMarkets.

- Various paid and unpaid sources of information, such as annual reports, press releases, white papers, and databases, were studied.
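The two bottom-up routes described above, shipments × penetration × ASP on one side and apportioned company revenue on the other, can be sketched as follows. Every vendor name, shipment count, penetration rate, chips-per-server figure, ASP, and revenue share in this example is a hypothetical placeholder, not MarketsandMarkets data, and the `units_per_server` parameter and function names are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class OfferingLine:
    # Hypothetical per-offering inputs for one vendor.
    offering: str            # "compute", "memory", or "network"
    server_shipments: int    # AI servers shipped in scope
    penetration: float       # share of those servers using this offering
    units_per_server: float  # chips of this offering per server (assumed)
    asp_usd: float           # average selling price per chip


def shipment_based_revenue(lines: list[OfferingLine]) -> dict[str, float]:
    """Revenue by offering = shipments x penetration x units per server x ASP."""
    totals: dict[str, float] = {}
    for line in lines:
        chips = line.server_shipments * line.penetration * line.units_per_server
        totals[line.offering] = totals.get(line.offering, 0.0) + chips * line.asp_usd
    return totals


def revenue_share_based(company_revenue_usd: dict[str, float],
                        ai_chip_share: dict[str, float]) -> float:
    """Sanity check: sum each company's revenue times its assumed AI-chip percentage."""
    return sum(rev * ai_chip_share[name] for name, rev in company_revenue_usd.items())


lines = [
    OfferingLine("compute", 100_000, 0.9, 8, 25_000.0),
    OfferingLine("memory", 100_000, 0.9, 8, 3_000.0),
    OfferingLine("network", 100_000, 0.7, 4, 1_500.0),
]
by_offering = shipment_based_revenue(lines)
print({k: f"USD {v / 1e9:.2f} B" for k, v in by_offering.items()})

check = revenue_share_based({"VendorA": 40e9, "VendorB": 10e9},
                            {"VendorA": 0.5, "VendorB": 0.3})
print(f"Revenue-share check: USD {check / 1e9:.1f} B")
```

Keeping the two routes as separate functions mirrors the text: the shipment model produces the estimate, and the revenue-share sum serves as an independent sanity check on it.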

Top-Down Approach

- The global market size of AI chips was estimated based on data from major companies.

- The growth of the AI chip market exhibited an upward trend during the studied period, as it is currently in the initial stage of the product cycle, with major players beginning to expand their business into various market application areas.

- The types of AI chips, their features and properties, geographic presence, and key applications served by all players in the AI chip market were studied to estimate and determine the percentage split of the segments.

- Different types of AI chip offerings, including compute, memory, and network, and their penetration among end users, were also studied.

- Based on secondary research, the market was categorized by compute, memory, network, technology, function, and end user.

- The demand generated by companies operating in different end-use application segments was analyzed.

- Multiple discussions were conducted with key opinion leaders across major companies involved in developing AI chips and related components to validate the market split by compute, memory, network, technology, function, and end user.

- The regional splits were estimated using secondary sources, based on factors such as the number of players in a specific country and region, as well as the adoption and use cases of each implementation type in relation to applications within the region.
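The top-down route works in the opposite direction: start from an overall market estimate and allocate it with percentage splits. In this minimal sketch, the USD 203.24 billion 2025 total and North America's 36.4% share come from this report; all other splits are hypothetical placeholders. The `allocate` helper also checks that each set of shares sums to 100%.

```python
import math

TOTAL_2025_USD_B = 203.24  # 2025 market size stated in this report

# Hypothetical splits, except North America's 36.4%, which is taken from the report.
OFFERING_SPLIT = {"compute": 0.70, "memory": 0.20, "network": 0.10}
REGION_SPLIT = {"North America": 0.364, "Asia Pacific": 0.35, "Europe": 0.20, "RoW": 0.086}


def allocate(total: float, split: dict[str, float]) -> dict[str, float]:
    """Split a total into segments; shares must sum to 1 (within rounding)."""
    if not math.isclose(sum(split.values()), 1.0, abs_tol=1e-9):
        raise ValueError("segment shares must sum to 100%")
    return {segment: total * share for segment, share in split.items()}


for name, split in (("Offering", OFFERING_SPLIT), ("Region", REGION_SPLIT)):
    parts = allocate(TOTAL_2025_USD_B, split)
    print(name, {k: round(v, 2) for k, v in parts.items()})
```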

AI Chip Market: Top-Down and Bottom-Up Approach

Data Triangulation

After determining the overall market size through the market size estimation process explained earlier, the total market was divided into several segments and subsegments. Data triangulation and market breakdown procedures were employed to complete the overall market engineering process and derive precise statistics for all segments and subsegments, as applicable. The data was triangulated by studying various factors and trends from both the demand and supply sides. Additionally, the AI chip market size was validated using both top-down and bottom-up approaches.
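Validating the market size with both approaches amounts to comparing two independent estimates. The sketch below shows one simple way to do this; the 5% tolerance, the gap measured relative to the mean, and the simple-average reconciliation are all assumptions, since the report does not specify its tolerance or reconciliation rule, and the input estimates are illustrative.

```python
from typing import NamedTuple


class Triangulation(NamedTuple):
    consistent: bool
    relative_gap: float
    reconciled: float


def triangulate(top_down: float, bottom_up: float, tolerance: float = 0.05) -> Triangulation:
    """Compare two market estimates; flag them if they diverge by more than `tolerance`.

    The gap is measured relative to the mean of the two estimates, and the
    reconciled value is their simple average (both are illustrative choices).
    """
    if top_down <= 0 or bottom_up <= 0:
        raise ValueError("estimates must be positive")
    mean = (top_down + bottom_up) / 2
    gap = abs(top_down - bottom_up) / mean
    return Triangulation(gap <= tolerance, gap, mean)


result = triangulate(top_down=205.0, bottom_up=200.0)  # hypothetical USD billion
print(result)
```

An estimate pair that fails the check would, in this sketch, send the analyst back to revisit the underlying splits and penetration assumptions rather than simply averaging them.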

Market Definition

An AI chip is a type of specialized processor designed to efficiently perform artificial intelligence tasks, particularly in machine learning, natural language processing, generative AI, computer vision, and neural network computations. These chips are capable of conducting parallel processing in complex AI operations, including AI training and inference, allowing for faster execution of AI workloads compared to general-purpose processors.

Key Stakeholders

- Government and financial institutions, and investment communities

- Analysts and strategic business planners

- Semiconductor product designers and fabricators

- Application providers

- AI solution providers

- AI platform providers

- Business providers

- Professional service/solution providers

- Research organizations

- Technology standard organizations, forums, alliances, and associations

- Technology investors

Report Objectives

- To define, describe, and forecast the AI chip market based on offering, function, technology, and end user

- To forecast the size of the market segments for four major regions: North America, Europe, Asia Pacific, and the Rest of the World (RoW)

- To forecast the size and market segments of the AI chip market by volume based on offerings

- To provide detailed information regarding drivers, restraints, opportunities, and challenges influencing the growth of the market

- To provide an ecosystem analysis, case study analysis, patent analysis, technology analysis, pricing analysis, Porter's five forces analysis, investment and funding scenario, and regulations pertaining to the market

- To provide a detailed overview of the value chain analysis of the AI chip ecosystem

- To strategically analyze micro markets with regard to individual growth trends, prospects, and contributions to the total market

- To analyze opportunities for stakeholders by identifying high-growth segments of the market

- To strategically profile the key players, comprehensively analyze their market positions in terms of ranking and core competencies, and provide a competitive landscape of the market

- To analyze strategic approaches such as product launches, acquisitions, agreements, and partnerships in the AI chip market

- To understand and analyze the impact of the 2025 US tariffs introduced by the Trump administration on the AI chip market

Available Customizations

With the given market data, MarketsandMarkets offers customizations according to the company’s specific needs. The following customization options are available for the report:

Company Information

- Detailed analysis and profiling of additional market players (up to 5)
